Introduction to AI

Project 4: Multi-agent systems

Summary

This is one of the longer projects (and thus you have more time to complete it). I estimate that this project will take closer to ~15 hours than the 6-10 hours I estimated for earlier projects. Note that, as with all of the projects, graduate students have additional requirements.

By the end of this project, you will have accomplished the following objectives.

- Created a team of agents that effectively coordinate to achieve a goal using planning and multi-agent coordination

- Reused your code from the previous projects to allow your agents to navigate effectively around the environment

Capture the flag (CTF)

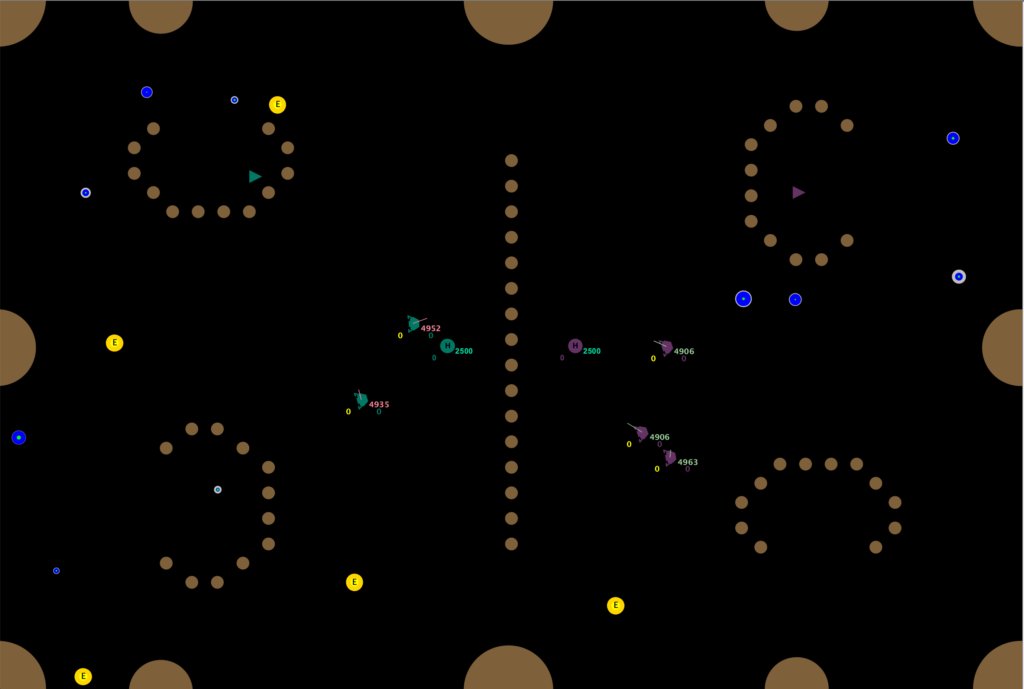

This project focuses on multi-agent systems in a new SpaceSettlers environment: Capture the Flag (CTF). The rules of the environment are the same as before, with mineable and non-mineable asteroids, but a new object has been added: a Flag. A field of non-movable, non-mineable asteroids has been set up to form flag alcoves and barriers between the two sides. Each team has a single Flag that starts at a random location inside one of that team's two alcoves. The initial field setup is shown in the image above. The non-mineable asteroids are the same at the start of every game. The small non-mineable asteroids (forming the barrier and alcoves) remain stationary for the duration of the game. The larger non-mineable asteroids (around the perimeter) move slowly, adding to the navigational challenge. The mineable and gameable asteroids continue to be randomly generated and to move around the environment.

CTF specific rules

The rules for the flag are:

- If a ship touches its own flag, it will move to a new random location inside a randomly chosen alcove for that team (could be the same one or could switch, it is chosen randomly).

- If a ship touches the other team’s flag, that ship picks up the flag. This is indicated in the graphics with a little white flag icon inside your ship.

- To count the flag for your team, the ship carrying the flag must bring it back to one of your bases. Once the flag carrier touches the base, the flag counts for your team.

- If a ship dies while carrying the flag, the flag drops where the ship died. It acquires the ship’s velocity at the time of death, so it can move around.
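One way to track these rules in your agent's knowledge representation is a small state machine per flag. The sketch below is an illustration under stated assumptions, not SpaceSettlers code: `FlagTracker`, its states, and its methods are hypothetical names; each team is assumed to have exactly the two alcoves described above; touches while the flag is being carried are ignored because the rules do not cover that case; and what happens to a flag after it is counted is left out, since the rules above do not specify it.

```java
import java.util.Random;

// Hypothetical flag model for an agent's knowledge base; these names are NOT part of SpaceSettlers.
class FlagTracker {
    enum Status { IN_ALCOVE, CARRIED, DROPPED }

    private static final int ALCOVES_PER_TEAM = 2; // assumption: two alcoves per team, as described above

    private final String ownerTeam;
    private final Random random;
    private Status status = Status.IN_ALCOVE;
    private int alcoveIndex;        // which of the owner's alcoves; meaningful when IN_ALCOVE
    private String carrierShipId;   // meaningful when CARRIED
    private String carrierTeam;     // meaningful when CARRIED
    private double driftX, driftY;  // velocity inherited from a dead carrier; meaningful when DROPPED

    FlagTracker(String ownerTeam, Random random) {
        this.ownerTeam = ownerTeam;
        this.random = random;
        this.alcoveIndex = random.nextInt(ALCOVES_PER_TEAM); // the flag starts in a random alcove
    }

    /** Rules 1 and 2. Touches while the flag is carried are ignored (an assumption). */
    void onTouch(String shipId, String shipTeam) {
        if (status == Status.CARRIED) {
            return;
        }
        if (shipTeam.equals(ownerTeam)) {
            // Own team: the flag jumps to a randomly chosen alcove (possibly the same one).
            alcoveIndex = random.nextInt(ALCOVES_PER_TEAM);
            status = Status.IN_ALCOVE;
        } else {
            // Other team: the touching ship picks the flag up.
            carrierShipId = shipId;
            carrierTeam = shipTeam;
            status = Status.CARRIED;
        }
    }

    /** Rule 4: the carrier died, so the flag drops and keeps the carrier's velocity. */
    void onCarrierDied(double velocityX, double velocityY) {
        if (status != Status.CARRIED) {
            return;
        }
        driftX = velocityX;
        driftY = velocityY;
        carrierShipId = null;
        carrierTeam = null;
        status = Status.DROPPED;
    }

    /** Rule 3: true if this ship touching a base owned by baseTeam counts as a capture. */
    boolean countsAsCapture(String shipId, String baseTeam) {
        return status == Status.CARRIED
                && shipId.equals(carrierShipId)
                && baseTeam.equals(carrierTeam);
    }

    Status status() { return status; }
    int alcoveIndex() { return alcoveIndex; }
    double[] drift() { return new double[] {driftX, driftY}; }

    public static void main(String[] args) {
        FlagTracker redFlag = new FlagTracker("Red", new Random(42));
        redFlag.onTouch("blue-1", "Blue");                              // a Blue ship picks up Red's flag
        System.out.println(redFlag.status());                           // CARRIED
        System.out.println(redFlag.countsAsCapture("blue-1", "Blue"));  // true
        redFlag.onCarrierDied(3.0, -1.5);
        System.out.println(redFlag.status());                           // DROPPED
    }
}
```

A central command agent could keep one tracker per flag and use the current status to choose between behaviors such as "go get the flag", "escort the carrier", or "pick up the dropped flag".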

CTF Scoring

There are two scoring mechanisms for Capture the Flag, one for the cooperative approach and one for the competitive approach. In both cases, teams will need to acquire flags. Resources will help teams to obtain flags by enabling teams to buy additional ships, bases, and powerups.

- Cooperative: The score is the total flags collected by both teams in the game MINUS the total kills from both teams (i.e., you are penalized for killing ships, because this is against the goals of the cooperative ladder). This means that your final game score depends on working with the heuristic (and not actively interfering with it).

- Competitive: The score is the total Flags collected by your team, meaning you are directly competing against the other team for total number of flags.
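Both scoring rules are simple arithmetic. The hypothetical helper below only restates them so the trade-off is explicit: in the cooperative case every kill by either team cancels one flag collected by either team. `CtfScoring` and its methods are illustrative names, not part of SpaceSettlers.

```java
// Hypothetical helper that restates the two CTF scoring rules; not a SpaceSettlers class.
final class CtfScoring {
    private CtfScoring() {
    }

    /** Cooperative: each flag either team collects adds one; each kill by either team subtracts one. */
    static int cooperativeScore(int flagsTeamA, int flagsTeamB, int killsTeamA, int killsTeamB) {
        return (flagsTeamA + flagsTeamB) - (killsTeamA + killsTeamB);
    }

    /** Competitive: only the flags your own team collected count. */
    static int competitiveScore(int ownTeamFlags) {
        return ownTeamFlags;
    }

    public static void main(String[] args) {
        // Your team collects 3 flags, the other team collects 2, and your team kills 1 ship.
        System.out.println(cooperativeScore(3, 2, 1, 0)); // 4
        // The same game scored competitively for your team.
        System.out.println(competitiveScore(3));          // 3
    }
}
```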

Project 4 tasks

Your task for this project is outlined below. The main foci of this project are planning and multi-agent coordination.

- Create a team of at least three ships that coordinate their behavior with one another to achieve the goal of acquiring flags. All ships should move around the environment intelligently. The agents are initially homogeneous, but they can acquire capabilities that make them heterogeneous. To ensure you meet this goal, all teams must start with 3 ships. You will need to change your config file to start with the right number of ships. You may purchase up to 10 ships total for your team.

- Identify a set of high-level behaviors appropriate for each ship in your team, and implement them. You are not constrained to a specific method for implementing your agent, but you should use what you have learned so far this semester. Try to make effective use of the many types of powerups available to your ships, as well as good avoidance strategies.

- Implement PLANNING within your team or within a single agent. I suggest that you implement it at the highest level (e.g., deciding which high-level behavior to choose at any time), but you could also use planning at a lower level, such as improving A* or other navigation functions, or deciding which powerup to use in a given situation. For each behavior used by your planner, specify a set of pre-conditions and post-conditions appropriate for use in planning.

- Implement an approach for effective multi-agent coordination. Given the SpaceSettlers framework, this is most easily done using a central command agent. If you used planning at the highest level, your coordination can be done using planning. Ensure that you re-plan as frequently as events warrant, such as when a high-level action completes or a ship suddenly loses energy. If you put planning at a lower level, then ensure that your agents are coordinating their actions effectively! If they need to communicate with one another, ensure that is also done.

- Integrate methods from other parts of the semester into your agent. For example, use A* if you can. Create a good knowledge representation. Use search effectively.
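To make the planning requirement concrete, here is a minimal sketch of STRIPS-style action schemas (the propositional core of PDDL) with a forward breadth-first planner. It assumes the state is a set of ground facts written as strings; `PlanAction`, `ForwardPlanner`, facts such as `at(ship1,base)`, and the four demo actions are hypothetical examples, not SpaceSettlers API and not a required design. Breadth-first search is just one simple choice of search; A* with a planning heuristic would also fit here.

```java
import java.util.*;

// Hypothetical STRIPS-style action schema over ground facts; not a SpaceSettlers class.
final class PlanAction {
    final String name;
    final Set<String> preconditions;
    final Set<String> addEffects;
    final Set<String> deleteEffects;

    PlanAction(String name, Set<String> preconditions, Set<String> addEffects, Set<String> deleteEffects) {
        this.name = name;
        this.preconditions = preconditions;
        this.addEffects = addEffects;
        this.deleteEffects = deleteEffects;
    }

    /** An action is applicable when every precondition holds in the state. */
    boolean isApplicable(Set<String> state) {
        return state.containsAll(preconditions);
    }

    /** Successor state: remove the delete effects, then add the add effects. */
    Set<String> apply(Set<String> state) {
        Set<String> next = new HashSet<>(state);
        next.removeAll(deleteEffects);
        next.addAll(addEffects);
        return next;
    }
}

final class ForwardPlanner {
    private ForwardPlanner() {
    }

    /** Breadth-first forward search; returns a shortest plan, or null if the goal is unreachable. */
    static List<String> plan(Set<String> initial, Set<String> goal, List<PlanAction> actions) {
        Deque<Set<String>> frontier = new ArrayDeque<>();
        Map<Set<String>, List<String>> planTo = new HashMap<>(); // doubles as the visited set
        frontier.add(initial);
        planTo.put(initial, new ArrayList<>());
        while (!frontier.isEmpty()) {
            Set<String> state = frontier.poll();
            List<String> planSoFar = planTo.get(state);
            if (state.containsAll(goal)) {
                return planSoFar;
            }
            for (PlanAction action : actions) {
                if (!action.isApplicable(state)) {
                    continue;
                }
                Set<String> next = action.apply(state);
                if (planTo.containsKey(next)) {
                    continue; // already reached by a plan at least as short
                }
                List<String> extended = new ArrayList<>(planSoFar);
                extended.add(action.name);
                planTo.put(next, extended);
                frontier.add(next);
            }
        }
        return null;
    }

    static Set<String> facts(String... literals) {
        return new HashSet<>(Arrays.asList(literals));
    }

    /** Hypothetical one-ship domain used for the demo. */
    static List<PlanAction> demoActions() {
        return Arrays.asList(
                new PlanAction("MoveToEnemyAlcove", facts("at(ship1,base)", "hasEnergy(ship1)"),
                        facts("at(ship1,enemyAlcove)"), facts("at(ship1,base)")),
                new PlanAction("PickUpFlag", facts("at(ship1,enemyAlcove)", "flagIn(enemyAlcove)"),
                        facts("carrying(ship1)"), facts("flagIn(enemyAlcove)")),
                new PlanAction("ReturnToBase", facts("at(ship1,enemyAlcove)"),
                        facts("at(ship1,base)"), facts("at(ship1,enemyAlcove)")),
                new PlanAction("ScoreFlag", facts("at(ship1,base)", "carrying(ship1)"),
                        facts("flagScored"), facts("carrying(ship1)")));
    }

    static Set<String> demoInitialState() {
        return facts("at(ship1,base)", "hasEnergy(ship1)", "flagIn(enemyAlcove)");
    }

    public static void main(String[] args) {
        System.out.println(plan(demoInitialState(), facts("flagScored"), demoActions()));
        // [MoveToEnemyAlcove, PickUpFlag, ReturnToBase, ScoreFlag]
    }
}
```

To re-plan, call `plan` again from the current state whenever a high-level action completes or something unexpected happens, such as a sudden loss of energy.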
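For the central-command approach to coordination, one simple option is for the commander to give each ship a distinct high-level role and to decide when the team should re-plan. The sketch below assumes greedy closest-pair assignment and flat Euclidean distance as simplifications; `CentralCommand`, `ShipInfo`, `Task`, the role names, and the energy-drop threshold are hypothetical, not SpaceSettlers API. A planner such as the one required above could produce the task list this commander distributes.

```java
import java.util.*;

// Hypothetical central command agent; all names are illustrative, not SpaceSettlers API.
final class CentralCommand {
    static final class ShipInfo {
        final String id;
        final double x, y;
        ShipInfo(String id, double x, double y) { this.id = id; this.x = x; this.y = y; }
    }

    static final class Task {
        final String role;
        final double x, y;
        Task(String role, double x, double y) { this.role = role; this.x = x; this.y = y; }
    }

    /**
     * Greedy assignment: consider every (ship, task) pair from closest to farthest and give each
     * ship at most one task and each task at most one ship. Flat Euclidean distance is a simplification.
     */
    static Map<String, String> assignRoles(List<ShipInfo> ships, List<Task> tasks) {
        List<double[]> pairs = new ArrayList<>(); // each entry: {distance, shipIndex, taskIndex}
        for (int s = 0; s < ships.size(); s++) {
            for (int t = 0; t < tasks.size(); t++) {
                double distance = Math.hypot(ships.get(s).x - tasks.get(t).x, ships.get(s).y - tasks.get(t).y);
                pairs.add(new double[] {distance, s, t});
            }
        }
        pairs.sort(Comparator.comparingDouble(pair -> pair[0]));
        Map<String, String> roleByShip = new LinkedHashMap<>();
        Set<Integer> assignedTasks = new HashSet<>();
        for (double[] pair : pairs) {
            ShipInfo ship = ships.get((int) pair[1]);
            int taskIndex = (int) pair[2];
            if (roleByShip.containsKey(ship.id) || assignedTasks.contains(taskIndex)) {
                continue; // ship already busy or task already covered
            }
            roleByShip.put(ship.id, tasks.get(taskIndex).role);
            assignedTasks.add(taskIndex);
        }
        return roleByShip;
    }

    /** Re-plan when a high-level action finishes or energy dropped sharply since the last plan. */
    static boolean shouldReplan(boolean actionCompleted, double energyAtLastPlan, double energyNow,
                                double dropThreshold) {
        return actionCompleted || energyAtLastPlan - energyNow >= dropThreshold;
    }

    public static void main(String[] args) {
        List<ShipInfo> ships = Arrays.asList(
                new ShipInfo("ship1", 0, 0), new ShipInfo("ship2", 100, 0), new ShipInfo("ship3", 50, 80));
        List<Task> tasks = Arrays.asList(
                new Task("defendFlag", 10, 0), new Task("attackFlag", 95, 5), new Task("mineAsteroid", 55, 70));
        System.out.println(assignRoles(ships, tasks));
        // {ship2=attackFlag, ship1=defendFlag, ship3=mineAsteroid}
    }
}
```

Greedy assignment is not always optimal; if it produces poor role splits, an optimal assignment method or assigning roles as part of the plan itself are reasonable alternatives.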

heuristic agents

There are two new heuristic agents for this project.

- PacifistFlagCollectorTeamClient

- AggressiveFlagCollectorTeamClient

These are extensions of the Passive and Aggressive Asteroid Collector clients. The difference is that both focus on collecting flags as well as asteroids, and the aggressive one also tries to shoot down enemies. Neither of them does any multi-agent coordination or planning, since they are intended to be baseline teams for you to work with or compete against.

Class-wide ladders – Extra credit

The extra credit ladders remain the same as with Project 1 but a bit more explanation is required so that you fully understand how scoring and ranking is done for CTF. You are welcome to choose a different ladder path than you chose for either of the previous projects. The class-wide ladders will start on Oct 15, 2021.

Cooperative ladder

To earn extra credit on the cooperative ladder, you must outperform the PassiveFlagCollectorTeamClient playing with itself. Every team whose average score in the nightly ladder run on the server beats that of the PassiveFlagCollectorTeamClient we provide will receive one point of extra credit for each night it beats the heuristic, up to a maximum of eight points.

To be very precise, if team A and team B submit to the ladder on a night, the ladder will run the following games:

- n games: A and PassiveFlagCollectorTeamClient

- n games: B and PassiveFlagCollectorTeamClient

- n games: PassiveFlagCollectorTeamClientForEC and PassiveFlagCollectorTeamClientForEC

- n is chosen based on how many students have submitted since the games must finish in a few hours. Fewer submissions = higher n.

The final rankings will be based on each team's average score over all of its games. That means A's score is its average over its n games paired with PassiveFlagCollectorTeamClient. Likewise, B's score is its average over its n games paired with PassiveFlagCollectorTeamClient. Finally, the score to beat for extra credit is the average score of PassiveFlagCollectorTeamClientForEC playing with itself. Team A will NEVER play team B in the cooperative ladder.
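The cooperative ladder arithmetic can be restated as a short sketch, assuming each game's score has already been computed as total flags minus total kills. `CooperativeLadder` and its methods are hypothetical, not the ladder server's code; in particular, treating a tie with the baseline as not beating it is an assumption.

```java
// Hypothetical restatement of the cooperative ladder arithmetic; not the ladder server's code.
final class CooperativeLadder {
    static final int MAX_EXTRA_CREDIT = 8;

    private CooperativeLadder() {
    }

    /** Average game score (total flags minus total kills) over one night's n games. */
    static double averageScore(int[] gameScores) {
        if (gameScores.length == 0) {
            throw new IllegalArgumentException("no games were played");
        }
        double total = 0;
        for (int score : gameScores) {
            total += score;
        }
        return total / gameScores.length;
    }

    /** A night counts only if the team's average strictly exceeds the baseline average (tie handling assumed). */
    static boolean beatsBaseline(int[] teamGames, int[] baselineGames) {
        return averageScore(teamGames) > averageScore(baselineGames);
    }

    /** One point per night the baseline was beaten, capped at eight. */
    static int extraCredit(boolean[] beatBaselineByNight) {
        int nightsBeaten = 0;
        for (boolean beaten : beatBaselineByNight) {
            if (beaten) {
                nightsBeaten++;
            }
        }
        return Math.min(nightsBeaten, MAX_EXTRA_CREDIT);
    }

    public static void main(String[] args) {
        int[] teamA = {5, 3, 4};      // A paired with PassiveFlagCollectorTeamClient
        int[] baseline = {3, 4, 2};   // PassiveFlagCollectorTeamClientForEC paired with itself
        System.out.println(averageScore(teamA));            // 4.0
        System.out.println(beatsBaseline(teamA, baseline)); // true
    }
}
```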

Competitive ladder

The competitive ladder is scored using the total Flags collected by your team, meaning you are directly competing against the other team for the total number of flags and AI Cores. All games will be played against AggressiveFlagCollectorTeamClient as well as against other students (one opponent at a time). The final rankings will be based on the average number of flags and cores acquired over all games that a team played in the ladder. The top-scoring student will receive three points of extra credit for each night that they remain on top, the second-scoring student will receive two points per night, and the third-scoring student will receive one point per night. The maximum extra credit remains eight points. Once a student has reached the maximum, their points trickle down to the next student.

To be very precise, if team A and team B submit to the ladder on a night, the ladder will run the following games:

- n games: A and AggressiveFlagCollectorTeamClient

- n games: B and AggressiveFlagCollectorTeamClient

- n games: A and B

- n games: PassiveFlagCollectorTeamClient and AggressiveFlagCollectorTeamClient

- n games: AggressiveFlagCollectorTeamClient and AggressiveFlagCollectorTeamClient

- n is chosen based on how many students have submitted since the games must finish in a few hours. Fewer submissions = higher n.

The final rankings will be the average number of flags for each team over all of its games. That means A's score is the average number of flags it acquired across all of its games. The score to beat for extra credit will be the better of the two average scores of PassiveFlagCollectorTeamClient and AggressiveFlagCollectorTeamClient.
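The competitive extra-credit rules can likewise be sketched. This is one interpretation, not the official ladder code: `CompetitiveLadder` is hypothetical, only students whose average beats the better heuristic average are ranked, and "trickle down" is read as skipping a student who is already at the eight-point cap so that their place's points go to the next student, with partial awards clipped at the cap.

```java
import java.util.*;

// Hypothetical sketch of the competitive extra-credit rules; the trickle-down reading is an assumption.
final class CompetitiveLadder {
    static final int MAX_EXTRA_CREDIT = 8;
    static final int[] POINTS_BY_PLACE = {3, 2, 1};

    private CompetitiveLadder() {
    }

    /** Students whose average flags beat the better of the two heuristic averages, best first. */
    static List<String> eligibleRanking(Map<String, Double> averageFlags,
                                        double passiveAverage, double aggressiveAverage) {
        double scoreToBeat = Math.max(passiveAverage, aggressiveAverage);
        List<String> eligible = new ArrayList<>();
        for (Map.Entry<String, Double> entry : averageFlags.entrySet()) {
            if (entry.getValue() > scoreToBeat) {
                eligible.add(entry.getKey());
            }
        }
        eligible.sort(Comparator.comparingDouble((String team) -> averageFlags.get(team)).reversed());
        return eligible;
    }

    /** Awards one night's points, updating totals in place. */
    static void awardNight(List<String> ranking, Map<String, Integer> totals) {
        int place = 0;
        for (String team : ranking) {
            if (place >= POINTS_BY_PLACE.length) {
                break;
            }
            int current = totals.getOrDefault(team, 0);
            if (current >= MAX_EXTRA_CREDIT) {
                continue; // capped: this place's points move down to the next student
            }
            totals.put(team, Math.min(MAX_EXTRA_CREDIT, current + POINTS_BY_PLACE[place]));
            place++;
        }
    }

    public static void main(String[] args) {
        Map<String, Double> averages = new HashMap<>();
        averages.put("alice", 5.0);
        averages.put("bob", 4.0);
        averages.put("carol", 3.0);
        averages.put("dave", 1.0);
        List<String> ranking = eligibleRanking(averages, 2.5, 2.0);
        Map<String, Integer> totals = new HashMap<>();
        totals.put("alice", 8); // already at the cap
        totals.put("carol", 7);
        awardNight(ranking, totals);
        System.out.println(ranking);                                        // [alice, bob, carol]
        System.out.println(totals.get("bob") + " " + totals.get("carol"));  // 3 8
    }
}
```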

extra credit

The extra credit opportunities for being creative and finding bugs remain the same as in Project 1. Remember you have to document it in your writeup to get the extra credit!

How to download and turn in your project

- Update your code from the last project. You can update your code at the command line with “git pull”. If you did not check out the code for Project 0, follow the Project 0 instructions to check it out.

- Note: the config file directories change for this project, as do the build targets you will want to run! Change the SpaceSettlersConfig.xml file in spacesettlers/config/captureTheFlagCompetitive or captureTheFlagCooperative to point to your agent in src/4×4. The detailed instructions for this are in Project 0. Make sure to copy a spacesettlersinit.xml file into the src/4×4 directory so your agent knows how to start. In spacesettlersinit.xml, change the line <ladderName>Random Client</ladderName> to use the team name you chose in Canvas.

- Write your planning code as described above

- Build and test your code using the ant compilation system within Eclipse, or using ant on the command line if you are not using Eclipse (we highly recommend Eclipse or another IDE!). Make sure you use the spacesettlers.graphics system to draw your graph on the screen as well as the path your ship chose using your search method. You can write your own graphics as well, but the provided classes should let you draw the graph quickly.

- Submit your project on spacesettlers.cs.ou.edu using the submit script as described below. You can submit as many times as you want and we will only grade the last submission.

- Submit ONLY the writeup to the correct Project 4 assignment on Canvas: Project 4 for CS 4013 or Project 4 for CS 5013.

- Copy your code from your laptop to spacesettlers.cs.ou.edu using the account that was created for you for this class (your username is your 4×4 and your password is the one you chose in Project 0). You can copy using scp, WinSCP, or pscp.

- ssh into spacesettlers.cs.ou.edu

- Make sure your working directory contains all the files you want to turn in. All files should live in the package 4×4. Note: The spacesettlersinit.xml file is required to run your client!

- Submit your files using one of the following commands (be sure your Java files come last). You can submit to only ONE ladder. If you submit to both, small green monsters will track you down and deal with you appropriately.

/home/spacewar/bin/submit --config_file spacesettlersinit.xml --project project4_coop --java_files *.java

/home/spacewar/bin/submit --config_file spacesettlersinit.xml --project project4_compete --java_files *.java


- After the project deadline, the above command will not accept submissions. If you want to turn in your project late, use:

/home/spacewar/bin/submit --config_file spacesettlersinit.xml --project project4_coop_late --java_files *.java

/home/spacewar/bin/submit --config_file spacesettlersinit.xml --project project4_compete_late --java_files *.java

Rubric

- Planning

- 20 points for correctly implementing planning within your group’s set of agents. A correct planner will choose among the actions intelligently using PDDL-style planning. Planning code should be well documented to receive full credit.

- 18 points if there is only one minor mistake. An example of a minor mistake would be not re-planning at the correct time or incorrectly specifying the pre- or post-conditions for an action.

- 15 points if there are several minor mistakes or if documentation is missing.

- 10 points if you have one major mistake. An example of a major mistake would be not using any pre- or post-conditions, or incorrectly applying them to your state representation.

- 5 points if you accidentally implement a search algorithm other than planning that at least moves the teams around the environment in an intelligent manner.


- Multi-agent coordination

- 20 points for correctly implementing multi-agent coordination within your group’s set of agents to achieve a common goal. A correct multi-agent system will coordinate team behavior intelligently, will rarely have ships running into each other, and will be well documented.

- 18 points if there is only one minor mistake. An example of a minor mistake would be not always coordinating well in a decentralized team, or sometimes (rarely!) assigning incorrect roles in a centralized system. Note that planning errors count under Planning above rather than here; this criterion focuses on the multi-agent coordination itself.

- 15 points if there are several minor mistakes or if it is not well documented.

- 10 points if you have one major mistake. An example of a major mistake would be frequently failing to coordinate actions.

- 5 points for several major mistakes.


- High-level behaviors

- 10 points for making good use of your knowledge from AI so far to create good high-level behaviors with properly documented (in the writeup and the code) action schemas

- 8 points for one minor mistake.

- 5 points for several minor mistakes or one major mistake.

- 3 points for implementing a behavior that is at least somewhat intelligent, even if it doesn’t fulfill the requirements of the high-level behavior specified by your team.


- Graphics

- 10 points for correctly drawing graphics that enable you to debug your planning and coordination and that help us to grade it.

- 7 points for drawing something useful for debugging and grading but with bugs in it

- 3 points for major graphical/printing bugs

- Knowledge integration

- 5 points for making good use of your knowledge from AI so far. For example, creative and good knowledge representations or integrating your search methods from earlier would count here. This is a good spot for creativity!

- CS 5013 students only: You must use both multi-agent coordination and other advanced planning mechanisms (such as duration, resources, etc.) in your action schemas and implementation

- 10 points for correct use of advanced planning mechanisms

- 5 points for bugs

- Good coding practices: We will randomly choose from one of the following good coding practices to grade for these 10 points. Note that this will be included on every project. Are your files well commented? Are your variable names descriptive (or are they all i, j, and k)? Do you make good use of classes and methods or is the entire project in one big flat file? This will be graded as follows:

- 10 points for well-commented code, descriptive variable names, or making good use of classes and methods

- 5 points if you have partially commented code, semi-descriptive variable names, or partial use of classes and methods

- 0 points if you have no comments in your code, variables are obscurely named, or all your code is in a single flat method

- Writeup: 15 points total. Your writeup is limited to 2 pages maximum. Any writeup over 2 pages will automatically receive a 0. Turn in your writeup to Canvas and your code to spacesettlers.

- 3 points: A full description of the high-level behaviors you chose

- 5 points: A full description of the planning approach you chose. This includes a full description of the goal condition, the state representation, and the schema pre-conditions and effects for each action.

- 4 points: A description of how you implemented multi-agent coordination

- 3 points: A description of how you integrated your knowledge from other parts of the course into your existing agent.
